Misinformation. Lies. And artificial intelligence

This AI image of a casual Pope in a puffer jacket fooled the world

In March 2023 it was widely reported that a Belgian man had committed suicide on the advice of an AI chatbot in an app called Chai.

His widow provided police with chatlogs in which AI fuelled the man’s existing anxiety surrounding climate change and encouraged him to take his own life.

With the rapidly evolving power of AI, and instant accessibility to the mis/information it can provide, there is growing pressure – including from the Australian Federal Government – for regulation.

Carolyn Semmler, Associate Professor in the School of Psychology, believes that as a society we can use AI and other technologies to help solve broader societal problems – but only once we have a firm understanding of how it works, its limitations and how we respond to it.

“The same problems keep arising, including over-reliance on technical systems, and a lack of understanding from the engineers who build these systems about how humans make decisions,” says Carolyn.

With a professional background spanning defence, law and cognitive psychology, Carolyn has seen the effects of adopting technology too early, including people being wrongfully convicted and social media harming people’s mental health.

The case of the man in Belgium may be a warning sign of the consequences if AI is left unregulated – but with the rise of AI chatbots across popular social media apps, our access to this technology is steadily increasing.

What are the consequences, for example, of a young person seeking advice from an AI chatbot instead of a healthcare professional?

“People are not seeing that these chatbots are just models that have been trained on the entire internet, which in itself contains content that is misleading, false and harmful,” says Carolyn.

AI artist Cam Harless reimagined US presidents rocking mullets

The speed with which misinformation can be generated through chatbots is unprecedented: AI is often articulate, dangerously confident and highly persuasive.

With the enormous information load, and diversity of viewpoints fed into the internet daily, AI itself can’t be considered a trusted source of information when its data input lacks consensus or proper expertise.

“There are myriad psychological studies about mental health that may have been published over the last 20 years and as an expert you spend years learning how to assess the evidence for the claims made from those studies,” Carolyn says. “I know what a good study is. I know what the scientific method is. I know how statistics work.

“I can look at a study and know whether I should believe the conclusions. The average person using ChatGPT has none of my training or experience, and so they’re reliant on the program’s confidence in assessing the accuracy of that information.”


AI-generated images of Donald Trump being arrested went viral

While it is clear that AI can provide dangerous misinformation for individuals, the dangers of its use in the geopolitical landscape present an even greater threat.

With the thousands of speeches, images and videos of politicians, religious figures and the like, AI has an abundance of data to draw from to generate content.

Its use so far has often been for comedic effect. Take, for example, deepfake images of the Pope donning a lavish new puffer jacket that fooled many social media users; those of Donald Trump facing a dramatic arrest upon his indictment; and former US Presidents with luscious mullets.

When it comes to fake recordings, videos and images of these prominent figures, it is increasingly difficult to discern fact from fiction.

In late May this year, the Federal Government expressed the need for regulations surrounding the use of AI software.

“The upside (of AI) is massive,” says Industry and Science Minister Ed Husic. “Whether it’s fighting superbugs with new AI-developed antibiotics or preventing online fraud. But as I have been saying for many years, there needs to be appropriate safeguards to ensure the ethical use of AI.”

Our politicians aren’t the only ones concerned. The so-called ‘Godfather of AI’, Geoffrey Hinton, quit his position at Google earlier this year to warn the masses of the dangers of AI’s ability to generate fake images and text, proclaiming that “the time to regulate AI is now”.

AI could soon regularly fuel the agendas and propaganda created by governments and “bad actors” around the world through mass misinformation campaigns. With the current conflict in Ukraine, and the alleged manipulation of elections, it could be argued that this is already happening.

Keith Ransom, Postdoctoral Researcher in the School of Psychology, agrees that the issue of misinformation and what to do about it has become more complex and more pressing with the advent of sophisticated AI technologies. Keith’s main project, Monitoring and Guarding Public Information Environment, or ‘MAGPIE’, focuses on how best to protect the public information environment to ensure that reliable information spreads widely and quickly whilst unreliable information does not.

“The spread of misinformation and undue influence being exerted by hostile actors is an issue as old as time,” says Keith.

“But while propaganda isn’t a new thing, the construction methods, the industrialisation, the rate and scale of automation and dissemination, that’s new, and that’s something we need to prepare for.”

With AI comes the opportunity to craft propaganda like never before, with wars potentially taking place through campaigns based on misinformation.

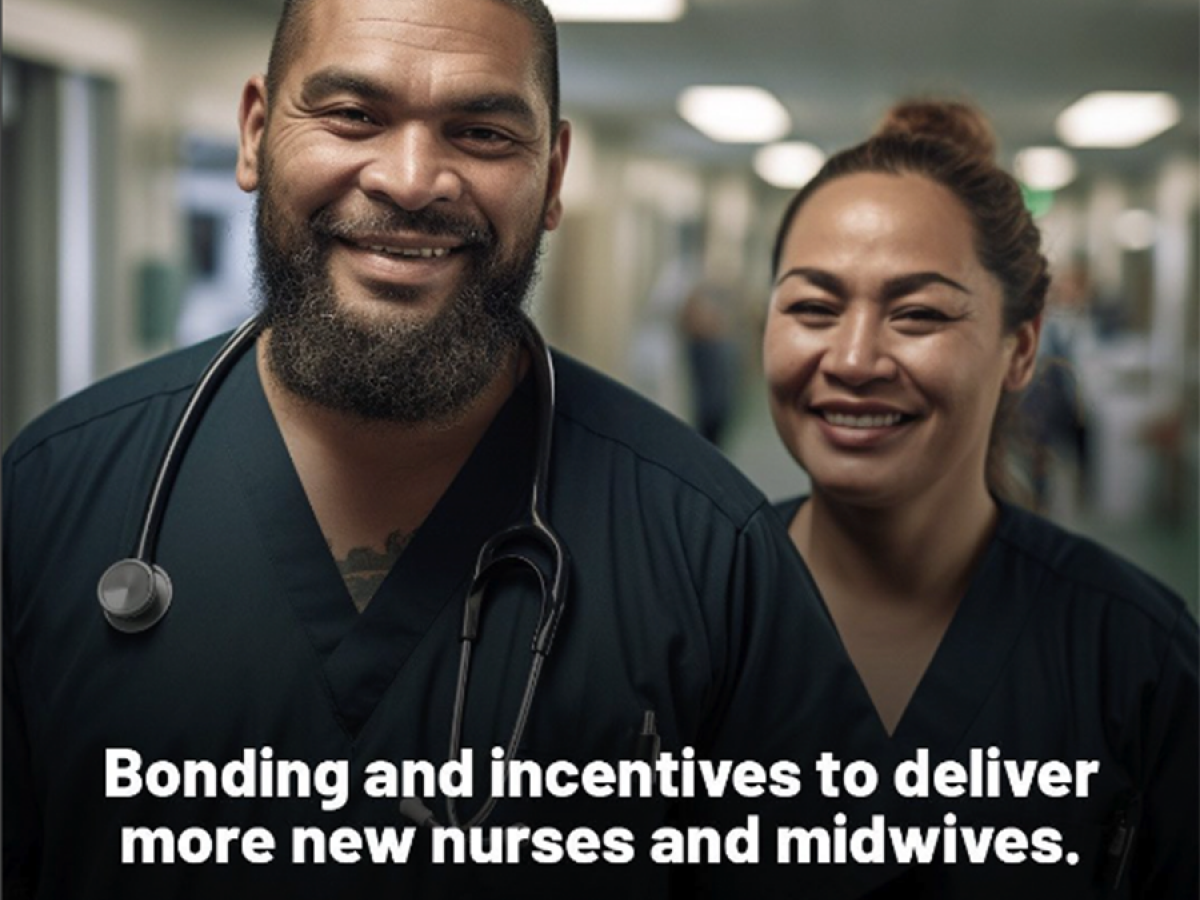

The New Zealand National Party has recently admitted to the use of AI to create fake “people” for attack ads on the New Zealand Labour Party. The two healthcare professionals seen in this image are entirely generated by AI – a startling example of the accuracy of readily available software.

“Take a claim like ‘Ukraine should cede territory to Russia in order to cease conflict’,” Keith says. “If I give ChatGPT that claim, and I prompt it to ‘think about all the arguments that feed into that’, it can generate an argument like ‘it should because Russia has a historic claim to territories in Crimea and the Donbas’.

“So then I can take that argument and ask it ‘give me some reasons why that is’ and it can elaborate.”

Keith is hoping a situational awareness tool he is developing with Dr Rachel Stephens, Associate Professor Carolyn Semmler and Professor Lewis Mitchell will help to map, detect, and defend against hostile influence campaigns being generated and orchestrated using AI.

“The beauty of AI is that it can generate arguments and reasons for things, before anyone has even brought them up,” he says. “This gives you a planning and ‘what-if’ capability that lets you say, ‘look, they haven’t started using that argument here, but if they do, look what happens’.”

As AI continues to improve, the situational awareness tool, in conjunction with advances in the writing capabilities of the software, could become a rapid-fire, self-evaluating writing machine that helps analysts understand the influence of campaigns being used by malignant actors. Identifying these campaigns will help to protect democratic processes and ensure that populations are not misled as they participate in public debates and decision-making.

“We just don’t know where the ability to automate influence will go. But there’s a strong reason for us to investigate it. Now is the time that we should be getting experience with these tools for these purposes as we’re pretty sure someone else is doing the same,” says Keith.

“AI shows immense promise to help us overcome major challenges, but without regulation, the quality of information it provides could be harmful,” says Carolyn.

“It’s up to us to determine the best way forward.”

Story by Isaac Freeman, Communications Assistant for the University of Adelaide, and Photographic Editor for Lumen.