VQA: Vision and language

Visual Question Answering (VQA) is a practical application for machine learning that allows computer systems to answer general questions about the content of an image. VQA combines vision and language processing with high-level reasoning.

Systems capable of answering general and diverse questions about the visual environment have direct practical utility in a wide range of applications: from digital personal assistants to aids for the visually impaired and robotics.

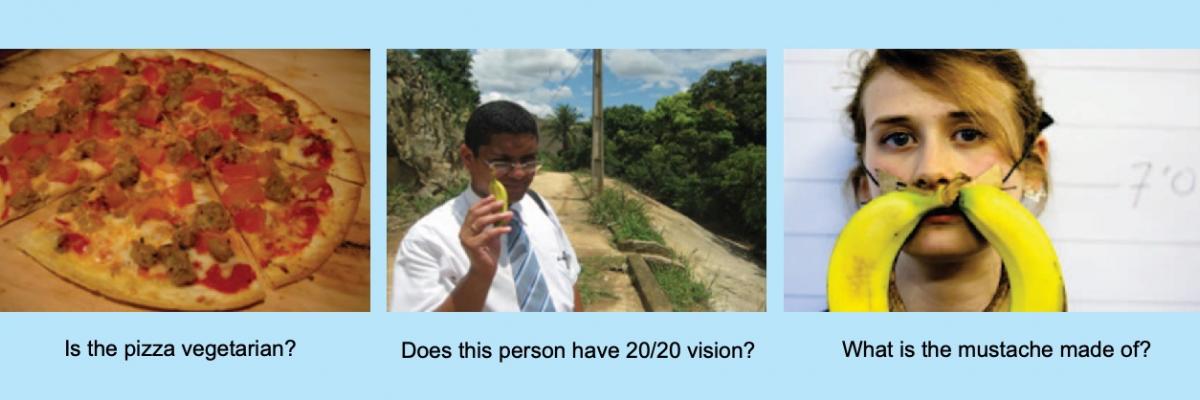

A typical instance of VQA involves the provision of an image with an associated plain text question (see examples in Figure 1). The task for the system is to determine the correct answer to the question and provide an answer; typically in a few words or a short phrase. This task, while seemingly trivial for human beings, spans the fields of computer vision and natural language processing (NLP) since it requires both the comprehension of the question and the parsing of the visual elements in the image.

Figure 1: VQA Examples

VQA is an important means for evaluating deep visual understanding, which is considered an overarching goal of the field of computer vision. Deep visual understanding is the ability of an algorithm to extract high-level information from images and to perform reasoning based on that information. In comparison to the classical tasks of computer vision, such as object recognition or image segmentation, instances of VQA cover a wide range of complexity. Indeed, the question itself can take an arbitrary form, as can the set of operations required to answer it.

-

Competitions and benchmarks

AIML has won numerous global competitions in VQA and has made major contributions to the development of the methodology.

1st Place Visual Question Answering Abstract Benchmark (Clip-art images)

REF: "Graph-Structured Representations for Visual Question Answering"

2017-2019 1st place MS COCO Image Captioning benchmark

REF: "Bottom-Up and Top-Down Attention for Image Captioning and VQA"

2017 1st place Visual Question Answering Challenge at CVPR (Conference on Computer Vision and Pattern Recognition)

REF: "Tips and Tricks for Visual Question Answering: Learnings from the 2017 Challenge"

2017 AIML has proposed the following vision and language datasets/benchmarks.

FVQA (Fact-based VQA): A dataset designed to evaluate VQA methods with deep reasoning abilities, that contains questions requiring general commonsense knowledge.

2017 "FVQA: Fact-based Visual Question Answering" Peng Wang, Qi Wu, Chunhua Shen, Anthony Dick, and Anton van den Hengel Vision-and-Language Navigation: A new task that extends concepts from VQA to simulated 3D environments, where a simulated robot can move around and observe its surrounding, with the task of following a navigation instruction (e.g. to fetch an object in another room).

2018 Peter Anderson, Qi Wu, Damien Teney, Jake Bruce, Mark Johnson,

Niko Sunderhauf, Ian Reid, Stephen Gould, and Anton van den Hengel -

Featured papers

2017 Damien Teney, Qi Wu, and Anton van den Hengel

In this paper we present a big-picture overview of visual question answering. It includes a range of popular approaches to the VQA problem based on deep learning.

2019 "Actively Seeking and Learning from Live Data" Damien Teney, and Anton van den Hengel We build a VQA system in which the machine itself looks for the information needed to answer a given question; in a similar way that a human would look up something on Wikipedia, or check the definition of a word in a dictionary, before giving his or her answer.

2017 "Tips and Tricks for Visual Question Answering: Learnings from the 2017 Challenge"

Damien Teney, Peter Anderson, Xiaodong He, and Anton van den Hengel

2018 "Bottom-Up and Top-Down Attention for Image Captioning and Visual Question Answering"

Peter Anderson, Xiaodong He, Chris Buehler, Damien Teney,

Mark Johnson, Stephen Gould, and Lei ZhangThese two publications described the method we used to win the 2017 VQA Challenge.

2017 "FVQA: Fact-based Visual Question Answering" Peng Wang, Qi Wu, Chunhua Shen, Anthony Dick, and Anton van den Hengel

A slightly different setting for VQA where the questions require more commonsense knowledge (rather than just asking just about visual properties like the colour of a car or the number of cows in the picture).

-

Projects

Deep visual understanding: learning to see in an unruly world

Deep Learning has achieved incredible success at an astonishing variety of Computer Vision tasks recently. This project will convey this success into the challenging domain of high-level image-based reasoning. It will extend deep learning to achieve flexible semantic reasoning about the content of images based on information gleaned from the huge volumes of data available on the Internet. We will apply the method to the problem of Visual Question Answering, to demonstrate the generality and flexibility of the semantic reasoning to be achieved. The project will overcome one of the primary limitations of deep learning generally, however, and will greatly increase its already impressive domain of practical application.

Anton van den Hengel, Damien Teney